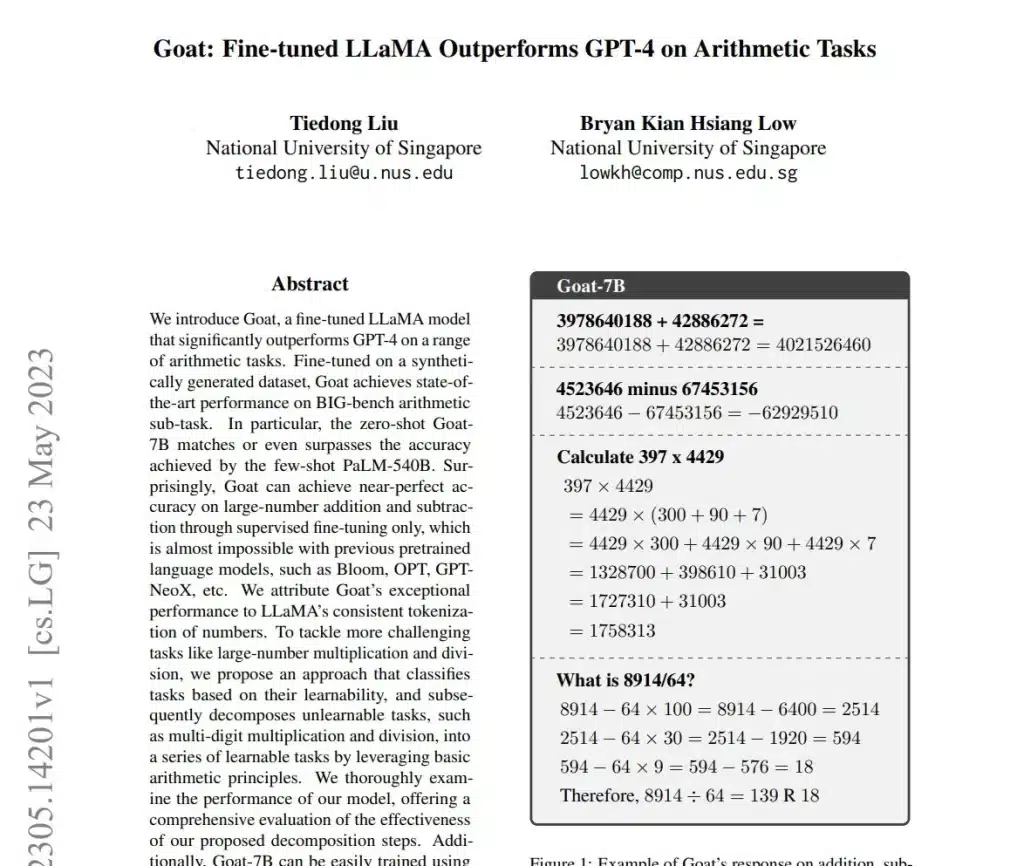

On June 8th, Gizcoupon reported that arithmetic remains the Achilles heel of the GPT-4 model. Because the model's logical reasoning ability still needs improvement, GPT-4 fails to produce correct results even on calculation problems that many people consider relatively simple. Recently, researchers from the National University of Singapore released an AI arithmetic model named Goat, which they describe as “dedicated to arithmetic problem-solving.” The team said that Goat is a fine-tuned version of the LLaMA model and that it achieves higher accuracy than GPT-4 on arithmetic tasks.

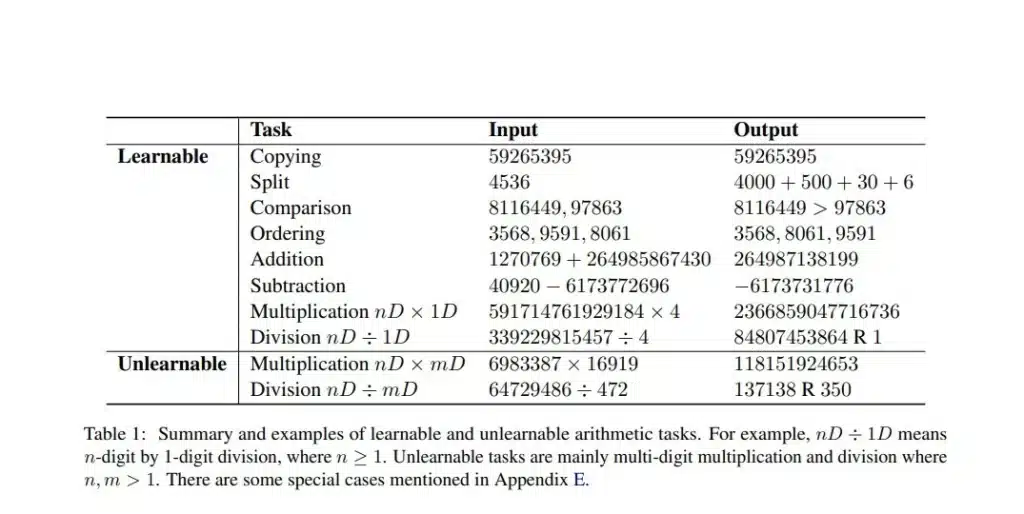

The researchers introduced a novel approach that categorizes arithmetic tasks by how learnable they are. They then use fundamental arithmetic principles to decompose unlearnable tasks into a series of learnable tasks (Gizcoupon note: breaking down complex computations into simpler steps) before training the AI model on them.
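As a rough illustration of what "breaking down complex computations into simpler steps" can look like, the sketch below splits a multi-digit multiplication into place-value parts, multiplies each part separately, and then adds the partial products one at a time. It is a minimal sketch under stated assumptions, not the researchers' training format: the function names `place_value_parts` and `decompose_multiplication` and the exact wording of each step are hypothetical and chosen only for this example.

```python
def place_value_parts(n: int) -> list[int]:
    """Split a non-negative integer into its non-zero place-value parts.

    Example: 397 -> [300, 90, 7]. Zero is returned as [0].
    """
    digits = str(n)
    parts = [
        int(d) * 10 ** (len(digits) - 1 - i)
        for i, d in enumerate(digits)
        if d != "0"
    ]
    return parts or [0]


def decompose_multiplication(a: int, b: int) -> tuple[list[str], int]:
    """Return step-by-step text for a * b and the final product.

    Hypothetical helper: the step layout is an assumption for illustration.
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")

    # Expand the smaller operand so there are fewer partial products.
    big, small = max(a, b), min(a, b)
    parts = place_value_parts(small)
    products = [big * p for p in parts]

    steps = [
        f"{big} * {small} = {big} * ({' + '.join(str(p) for p in parts)})",
        "= " + " + ".join(f"{big} * {p}" for p in parts),
        "= " + " + ".join(str(p) for p in products),
    ]

    # Add the partial products two at a time, recording each intermediate sum.
    remaining = products[:]
    while len(remaining) > 1:
        remaining = [remaining[0] + remaining[1]] + remaining[2:]
        steps.append("= " + " + ".join(str(p) for p in remaining))

    return steps, remaining[0]


if __name__ == "__main__":
    steps, result = decompose_multiplication(397, 4429)
    for line in steps:
        print(line)
```

Each line of the output involves only a single-digit-times-number multiplication or one addition, which is the kind of simpler step the article describes.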

This new method enables the model to learn answer patterns and generalize the process to unseen data, going beyond mere “weight memorization computation.” As a result, it improves arithmetic performance, achieving “near-perfect precision” on addition and subtraction of large numbers in zero-shot settings.

The researchers trained the model on GPUs with 24 GB of VRAM. The final model was tested on the BIG-bench arithmetic subtasks, where it showed remarkable accuracy and outperformed models such as Bloom, GPT-NeoX, and OPT. Notably, the zero-shot Goat-7B model even surpassed the few-shot PaLM-540B model in accuracy. It also far exceeds GPT-4 on computations involving large numbers.

Readers of Gizcoupon can find the link to the research paper here.