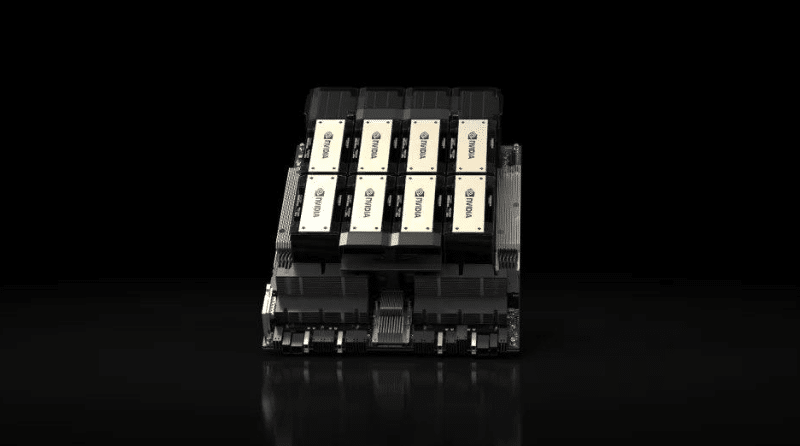

At the SC23 supercomputing conference in November 2023, NVIDIA unveiled the H200, the successor to its H100, which is widely regarded as the most powerful AI chip currently available.

Compared with its predecessor, the H200 delivers a reported performance improvement of 60% to 90%. Both the H200 and the H100 are built on NVIDIA's Hopper architecture, so the two chips are compatible. This lets companies already running the H100 upgrade to the H200 with little disruption.

The H200 is also NVIDIA's first GPU to use HBM3e memory, which is faster and offers more capacity than the previous generation, making it better suited to training and inference with large language models.

With HBM3e, the H200 carries 141GB of memory, and its memory bandwidth rises from the H100's 3.35TB/s to 4.8TB/s.
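As a quick back-of-the-envelope check, the jump from 3.35TB/s to 4.8TB/s works out to roughly a 43% increase in bandwidth. The sketch below is illustrative only: the helper names are hypothetical, and the "full sweep" figure assumes an idealized read of all 141GB at peak bandwidth with no overhead, which real workloads will not achieve.

```python
# Headline figures quoted in the article (decimal units: 1 TB = 1000 GB).
H100_BANDWIDTH_TBPS = 3.35
H200_BANDWIDTH_TBPS = 4.8
H200_MEMORY_GB = 141


def bandwidth_gain(old_tbps: float, new_tbps: float) -> float:
    """Return the relative bandwidth increase as a fraction (0.43 means +43%)."""
    return (new_tbps - old_tbps) / old_tbps


def full_sweep_ms(memory_gb: float, bandwidth_tbps: float) -> float:
    """Idealized time to read every byte of memory once, in milliseconds.

    Assumption: peak bandwidth is sustained with zero overhead (a lower bound).
    """
    seconds = memory_gb / (bandwidth_tbps * 1000)  # GB / (GB per second)
    return seconds * 1000


gain = bandwidth_gain(H100_BANDWIDTH_TBPS, H200_BANDWIDTH_TBPS)
sweep = full_sweep_ms(H200_MEMORY_GB, H200_BANDWIDTH_TBPS)
print(f"Bandwidth increase: {gain:.1%}")          # → Bandwidth increase: 43.3%
print(f"Idealized 141GB sweep: {sweep:.1f} ms")   # → Idealized 141GB sweep: 29.4 ms
```

For memory-bound workloads such as generating tokens from a large model, this kind of per-pass read time is a rough floor on latency, which is why bandwidth figures feature so prominently in the H200's positioning.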

The H200's gains are most visible in large-model inference. On the 70-billion-parameter Llama 2 model, the H200 runs inference roughly twice as fast as the H100 while cutting inference energy consumption by about 50%. For memory-intensive HPC applications, the higher memory bandwidth allows faster access to working data, which NVIDIA says can deliver up to a 110-fold faster time to results compared with CPUs.

The H200 is expected to ship in the second quarter of 2024, and NVIDIA has not yet disclosed its pricing. Given the ongoing shortage of computing power, however, major technology companies are expected to move early to secure supply.
